MVE441 / MSA220 Statistical learning for big data

统计学习代写 Here are some pointers for the exercises. We do not provide solution templates since there are many ways to tackle the problems.

Here are some pointers for the exercises. We do not provide solution templates since there are many ways to tackle the problems. However, we provide some guidelines as to the minimum requirements and point to some common but serious mistakes.

Exercise 1 统计学习代写

First, you were required to perform some exploratory analysis of the data. This does not mean that boxplots, histograms or cluster analysis suffice. You have to keep the primary task in mind:

classification and feature selection. What you should note as you explore the data:

- the classes overlap: there are several weak effects but the majority of features do not correlate strongly with the class label (you can assess this via correlation tests, rank tests, running single variable logistic regression, etc).

- PCA does not provide an informative low-dimensional data for classification as classes are not separated in the leading PC components

- Features differ in scale and distribution – some are very long-tailed with large values while most are not. You may consider transforming variables to enhance the association between the response and features.

- There are features that are moderately to strongly correlated with each other. This may affect the stability of feature selection. Without any domain knowledge it is difficult to consider these “groups” or pre-select based on correlation alone.

- The classes are imbalanced: around 75% of the training data belong to class 0. You need to consider this when training your classification models. 统计学习代写

You were required to analyze the data with at least two different classification methods. You were expected to base your choices on the exploratory analysis or otherwise motivate your choices. The key element to this part of the exercise is to handle the class imbalance. What does this mean? Well, accuracy is not an appropriate metric for this task since you will achieve a “good” performance by simply predicting class 0 everywhere. There are many ways to approach this problem and you were expected to do at least one of the following;

- Use an alternative cross-validation metric when tuning your methods (e.g. F1, AUC, MCC)

- Under- or over-sampling the majority or minority class, respectively.

Some methods are more sensitive to the class imbalance than others. For instance, Random Forest does not do well unless the class imbalance is carefully addressed – a result of many weak effects making greedy training in CART difficult. If you ran RF at default settings when tuning the method this results in a 0-everywhere classifier.

You were expected to notice the imbalance and address it using variants of the approaches listed above. You were expected to clearly state how your methods were tuned, which validation metric was used for tuning and clearly compare the results between the methods used. Note, R2 and MSE are not metrics one would use for classification. Also note, presenting results without commenting on them does not suffice. Finally, discussion on method performance needs to be backed up by results in either table or figure format and clearly stated in your report. Finally, you cannot use the validation data to tune your methods – this biases the results against simple methods and favors complex ones in addition to overestimating the performance of the methods on future data. 统计学习代写

The final task was to look into feature selection and specifically address how confident you are in the identification of relevant features for classification. This task could also be approached in multiple ways but should be guided by the results from task 2 above. If you in task 2 noted that RF performed poorly and favored another method, then clearly RF variable importance is not an appropriate method for identifying the most relevant features. If you addressed the imbalance in task 2, RF can provide some insight into relevant features. However, what you were expected to do was provide a measure/comment on your confidence in the selection.

While many methods provide feature ranking (variable importance, coefficient magnitudes or p-values, etc) you should have noted in task 1 that there were several correlated features present and this may impact the stability of feature selection. By performing repeated feature selection on bootstrap training data you can observe how stable the model selection is. RF variable importance on single runs tended to vary quite a lot (and again, depending on if you addressed the imbalance or not, these metrics carry limited information). Feature selection using e.g. sparse logistic regression tends to be more stable with a subset of features selected in close to 100% of bootstrap samples.

For this task you were thus expected to comment on the relative ease/difficulty of identifying important features using the different classification methods (i.e. if some features stand out). In addition, to say something about the confidence in your feature selection you needed to look into the stability of the feature identification.

Exercise 2

Some guidelines on how you could have tackled exercise 2 can be found in this PDF .

Exercise 3 统计学习代写

In this exercise you were expected to compare the ordinary Lasso to the Adaptive Lasso in simulations.

For the simulations, you were expected to simulate data from a sparse linear regression model. The assignment already contained hints on what a good setup would be, but some general notes:

Sparse means many zeros and few non-zeroes (there was some confusion with the term, see the note at the end)

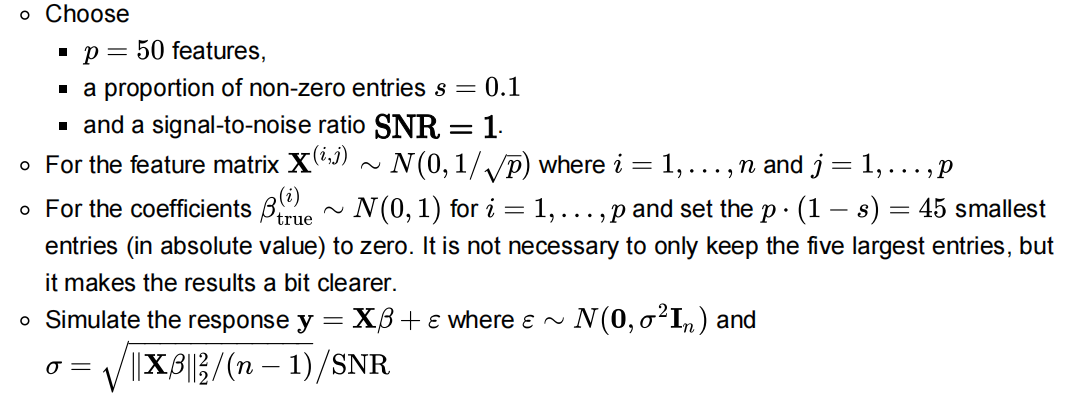

A setup that would have made it easy to see good results would have been

You were then supposed to

repeatedly simulate from this sparse linear regression model for varying values of n ,

estimate the coefficients with the ordinary Lasso and the adaptive Lasso

compare how well the methods recover the set ![]()

Concerning the repeated simulations:

You had to explore the simulations for

- different values of (at least four or five different values, ideally at least up to to see proper results), and

- repeat the simulations for the same value of multiple times (about 25 times would have been enough here) to account for variability in the simulations.

Two approaches were equally valid here. You could simulate a single once for both 1. and 2., in the beginning of the exercise, and use it throughout or simulate new for each iteration in 2 .

Note however that needs to be the same when exploring different values of to fully explore the influence of n .

Concerning the estimation of coefficients: 统计学习代写

The ordinary Lasso can be estimated with out-of-the box methods in most software packages. The crucial thing here was proper hyperparameter selection, but this was done correctly by pretty much everybody. The tricky thing was the adaptive lasso: As noted in the description of the exam, the hyperparameters (which appears in the weights of the adaptive lasso) and the penalisation parameter for the lasso step, let’s call it , need to be selected jointly. This means, the best solution is a cross validation of on a grid for each new dataset. There are a couple of things to note that were commonly done wrong:

Simply fixing to one value across the whole exercise leads to sub-optimal solutions and was considered somewhat lazy

Investigating the results of different fixed values of was a bit better, but also leads to suboptimal results, since each dataset has an optimal combination of and this can vary from simulation to simulation even for fixed .

There is seemingly a shortcut to the cross-validation, which is described in the paper that was linked from the exam description. For a sequence of parameters, 统计学习代写

- fix a in the sequence,

- use a built in method for cross-validation of (e.g. LassoCV on modified data or cv.glmnet with penalty.factor),

- save the MSE achieved for the optimal , i.e. as a function of , and finally

- choose the combination that led to the minimal MSE.

This strategy is almost correct. The major drawback is that the cross-validation is inside the loop over the sequence and not outside. Each time methods such as LassoCV or cv.glmnet are fitted, they create new folds. The MSE computed for each is therefore not directly comparable among s since these were computed across different folds. To do this correctly, one would have had to

- create folds manually,

- fix a in the sequence,

- fit a regularisation path of the lasso (using e.g. Lasso on the modified data or glmnet with penalty.factor) for a sequence of values for (these do not necessarily have to be the same across the sequence of s),

- compute the MSEs for the sequence of s across the folds defined at the beginning

- save the MSE achieved for the optimal , i.e. as a function of , and finally

- choose the combination that led to the minimal MSE.

Metric for training of Lasso/AdaLasso: 统计学习代写

By definition of the method, the Lasso and AdaLasso are usually trained on the prediction MSE on a test set (test folds). Other metrics are possible (i.e. when using logistic regression with a lasso penalty then some measure of classification quality can be better), however, it is wrong to use a measure that knows about. If the lasso and adaptive lasso are trained on a metric that e.g. computes the similarity of the estimated coefficient vector and, then additional information about is passed to the method. However, the whole point of this exercise was to see how Lasso and AdaLasso compare at reconstructing without explicit knowledge about it.

Concerning the comparison of Lasso to AdaLasso:

There was a multitude of ways how you could compare the two methods. A suggestion on a straight forward solution would have been: After estimating and , interpret the non-zero elements in all three coefficient vectors (including ) as positives in a binary classification problem and the zeros as negatives. You can then investigate measures such as

- sensitivity, measuring the ability of the Lasso/AdaLasso to set the elements in , i.e. the entries that are truly non-zero in the ground-truth, as non-zero

- specificity, measuring the ability of the Lasso/AdaLasso to set elements that are truly zero in the ground-truth to zero.

These two measures would have been enough to give a full picture, but many other options are possible. A perfect procedure would lead to sensitivity 1 and specificity 1, i.e. it finds all non-zero elements in and sets all elements outside of to zero.

You should have seen the following: 统计学习代写

Sensitivity (or some proxy measure for it) converges quickly to 1 for both methods Specificity for the ordinary Lasso increases for low but then stagnates. Not setting enough coefficients to zero is the major problem why the ordinary Lasso is not an oracle procedure. For the adaptive lasso, the specificity should converge to 1.

The main points you should have discussed are the inability of the lasso to set truly zero elements to zero (but often to small values) and the edge of the adaptive lasso to detect these coefficients and set them correctly to zero due to the additional information the adaptive lasso receives from the weights.

Note: In the very beginning of the exam I used the word sparsity incorrectly. Note however that the description itself (with the hint of setting elements to non-zero) was correct otherwise. I corrected submissions with this misunderstanding mildly.

If you ran your simulations as above but with 90% non-zero elements instead of 10% non-zero elements, you should have made the following observations (potentially in different metrics, but the overall picture is the same) 统计学习代写

- Sensitivity for ordinary lasso still approaches 1, whereas it stagnates below 1 after an initial increase for the adaptive lasso. This means that the lasso still sets all truly non-zero coefficients to non-zero, whereas the adaptive lasso sets truly non-zero coefficients to zero. Since the sparsity assumption (many zeros, few non-zeros) is severely violated here, neither of these methods is ideal, but the lasso performs better with respect to this metric.

- Specificity for the ordinary lasso starts off alright but then decreases towards zero, meaning that the 10% zero elements that existed are slowly being set to something non-zero, this is most likely because the lasso degenerates to OLS for increasing in this dense setting. The specificity of the adaptive lasso however goes towards 1 as in the sparse case described above, i.e. the adaptive lasso should find most of the 10% truly zero elements and set them to zero.